What is the EU AI Act?

The EU AI Act is the first comprehensive legal framework regulating artificial intelligence in the EU. It classifies AI systems into four risk levels (minimal, limited, high, and unacceptable) and sets compliance requirements according to each level. The Act aims to ensure safety, transparency, and accountability while promoting innovation in artificial intelligence.

EU AI Act Timeline

The EU AI Act entered into force on August 1, 2024, with provisions gradually being applied based on AI risk levels and roles.

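02 Feb. 2025: General provisions (including AI literacy) and the prohibitions on unacceptable-risk AI practices apply.

02 Aug. 2025: Obligations for general-purpose AI (GPAI) models apply, along with the rules on governance, notified bodies, and penalties.

02 Aug. 2026: The remainder of the regulation applies, including the obligations for the high-risk AI systems listed in Annex III, with the exception of Article 6(1).

02 Aug. 2027: Article 6(1) and the corresponding obligations apply to high-risk AI systems that are products, or safety components of products, covered by the Union harmonization legislation listed in Annex I.

31 Dec. 2030: AI systems that are components of the large-scale IT systems in the area of freedom, security, and justice listed in Annex X, and that were placed on the market or put into service before 02 Aug. 2027, must be brought into compliance.

Refer to: EU AI Act Articles 111 and 113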
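The following minimal Python sketch makes the staged schedule concrete. It encodes the milestones above as data and reports which ones have been reached on a given date. The names `MILESTONES`, `milestones_reached`, and `next_milestone` are hypothetical. The labels are simplified summaries, not legal text, so the sketch cannot tell you which specific articles apply to a particular system.

```python
from __future__ import annotations

from datetime import date

# Milestones from the timeline above: application dates per Article 113,
# plus the Article 111 deadline for large-scale IT systems.
# Labels are simplified summaries, not legal text.
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions and general provisions"),
    (date(2025, 8, 2), "Obligations for GPAI models"),
    (date(2026, 8, 2), "Main body of the regulation"),
    (date(2027, 8, 2), "Remaining high-risk obligations (Article 6(1))"),
    (date(2030, 12, 31), "Deadline for AI components of large-scale IT systems"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones whose date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> tuple[date, str] | None:
    """Return the first milestone strictly after `on`, or None if all have passed."""
    # MILESTONES is sorted by date, so the first match is the earliest upcoming one.
    return next(((start, label) for start, label in MILESTONES if start > on), None)


print(milestones_reached(date(2026, 1, 1)))
# ['Prohibitions and general provisions', 'Obligations for GPAI models']
print(next_milestone(date(2026, 1, 1)))
# (datetime.date(2026, 8, 2), 'Main body of the regulation')
```

Keeping the schedule as a sorted list of (date, label) pairs means adding or correcting a milestone is a one-line data change, with no change to the lookup logic.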

Roles and Responsibilities

The EU AI Act assigns obligations to organizations based on their role concerning AI systems or General-Purpose AI models (GPAI). Therefore, understanding these roles and their corresponding responsibilities is essential.

Provider

- Role: A natural or legal person, public authority, agency, or other body that develops an AI system or a general-purpose AI model (or has one developed) and places it on the market or puts it into service in the EU/EEA under its own name or trademark, whether for payment or free of charge.

- Responsibilities: Quality management system setup, conformity assessment, serious incident reporting, post-market monitoring, CE marking, documentation, etc.

Importer

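- Role: A natural or legal person located or established in the EU that places on the EU market an AI system bearing the name or trademark of a person established in a third country.

- Responsibilities: Verifying the provider's conformity assessment, technical documentation, and CE marking; notifying authorities of non-conformity; indicating the importer's name and contact details; cooperating with authorities; ensuring storage and transport conditions do not compromise compliance, etc.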

Distributor

- Role: A natural or legal person in the supply chain, other than the provider or the importer, that makes an AI system available on the Union market.

- Responsibilities: Verifying that high-risk AI systems bear the CE marking and come with the required documentation and instructions for use, withholding non-conforming systems and notifying the provider or importer, cooperating with authorities, ensuring storage and transport conditions do not compromise compliance, etc.

Deployer

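- Role: A natural or legal person, public authority, agency, or other body that uses an AI system under its authority, except where the system is used in the course of a personal, non-professional activity.

- Responsibilities: Using high-risk AI systems in accordance with the provider's instructions for use, human oversight, fundamental rights impact assessment (for certain deployers), transparency, cooperation with authorities, etc.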

Refer to: EU AI Act Article 3

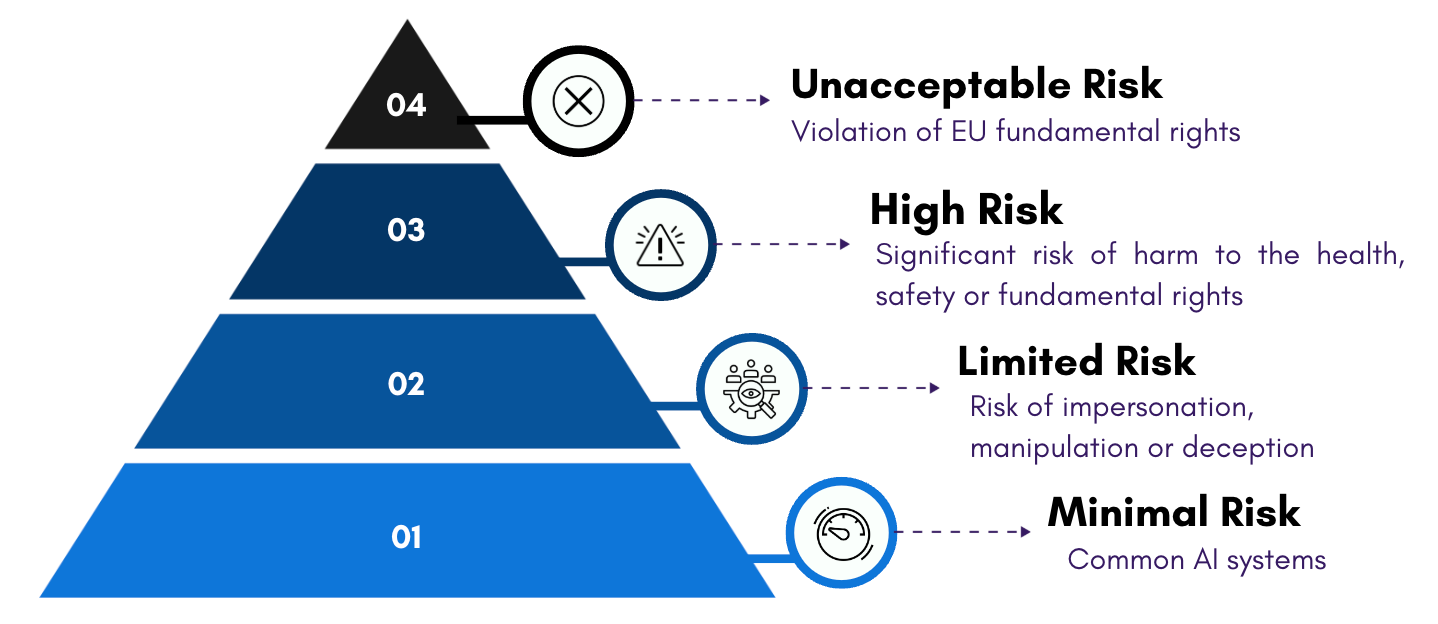

EU AI Act Risk Categories

The EU AI Act employs a risk-based approach to technology regulation, designed to keep up with rapid AI advancements. As a result, compliance obligations vary based on the level of risk, ensuring appropriate and proportionate protection for each system. AI systems are classified into four risk levels, as outlined below.

Unacceptable Risk

AI systems that pose a clear threat to people’s safety, livelihoods, and rights are strictly prohibited under the EU AI Act. These systems are banned from being placed on the market, deployed, or used within the EU. Below are the specific AI practices that fall under this prohibition.

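- Manipulative and Subliminal AI: AI systems that deploy subliminal techniques beyond a person's consciousness, or purposefully manipulative or deceptive techniques, to materially distort behavior in a way that causes or is likely to cause significant harm.

- Exploitation of Vulnerabilities: AI systems that exploit vulnerabilities due to age, disability, or a specific social or economic situation to materially distort behavior in a way that causes or is likely to cause significant harm.

- Social Scoring AI: AI systems that evaluate or classify individuals based on their social behavior or personal characteristics, leading to detrimental treatment in unrelated contexts or treatment disproportionate to that behavior.

- Biometric Categorization: AI systems that categorize individuals based on biometric data to deduce or infer sensitive attributes such as race, political opinions, religious beliefs, or sexual orientation.

- Predictive Criminal Profiling: AI systems that assess the risk of a person committing a criminal offense based solely on profiling or on an assessment of personality traits.

- Facial Recognition Database Expansion: AI systems that create or expand facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage.

- Emotion Recognition in Workplace and Education: AI systems that infer emotions in workplaces or educational institutions, except for medical or safety reasons.

- Real-Time Remote Biometric Identification in Public Spaces: The use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement purposes, except in narrowly defined cases such as searching for specific victims, preventing imminent threats to life, or locating suspects of serious crimes.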

Refer to: EU AI Act Article 5

High Risk

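High-risk AI systems are AI systems that pose a significant risk of harm to the health, safety, or fundamental rights of natural persons. Under Article 6, they include (1) AI systems that are products, or safety components of products, covered by the Union harmonization legislation listed in Annex I and required to undergo a third-party conformity assessment under that legislation, and (2) AI systems used in the areas listed in Annex III, such as employment, education, access to essential services, and law enforcement. Below are the key requirements for high-risk AI systems.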

- Risk management system

- Data quality and governance

- Transparency

- Human oversight

- Documentation and traceability

- Registration in a public EU database

- Accuracy, cybersecurity and robustness

- Demonstrated compliance via conformity assessments

Refer to: EU AI Act Article 6 and Chapter III

Limited Risk

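Limited-risk AI primarily raises transparency concerns. Under Article 50, providers must design AI systems that interact directly with people, such as chatbots, so that users know they are engaging with an AI system. AI-generated or manipulated content, such as deepfakes, must also be disclosed as such.

Separately, Article 6(3) provides that an AI system used in one of the areas listed in Annex III is not considered high-risk if it does not pose a significant risk of harm to people's health, safety, or fundamental rights and meets one or more of the following criteria:

- It is intended to perform a narrow procedural task.

- It is intended to improve the result of a previously completed human activity.

- It detects decision-making patterns or deviations from prior patterns, and is not meant to replace or influence the previously completed human assessment without proper human review.

- It performs a preparatory task for an assessment relevant to the use cases listed in Annex III.

An Annex III system that performs profiling of natural persons is always considered high-risk.

Refer to: EU AI Act Articles 6(3) and 50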
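The Article 6(3) logic for an AI system used in an Annex III area can be sketched as a simple decision function. The system stays high-risk unless at least one of the listed criteria applies, and profiling of natural persons always keeps it high-risk. This is a minimal illustration under simplifying assumptions:

- The `AnnexIIISystem` fields and the `is_high_risk` function are hypothetical.
- Each criterion is reduced to a yes/no flag.
- The overarching "no significant risk of harm" condition is assumed to be captured by those flags.

In practice, a provider relying on the derogation must document its assessment.

```python
from dataclasses import dataclass


@dataclass
class AnnexIIISystem:
    """Hypothetical yes/no profile of an AI system used in an Annex III area."""
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_without_replacing_review: bool = False
    preparatory_task_only: bool = False
    performs_profiling: bool = False


def is_high_risk(system: AnnexIIISystem) -> bool:
    """Simplified Article 6(3) check: high-risk unless a derogation criterion applies."""
    # Profiling of natural persons always keeps an Annex III system high-risk.
    if system.performs_profiling:
        return True
    # Meeting any one of the four criteria is enough to fall outside high risk
    # (assuming the system also poses no significant risk of harm).
    derogation_applies = any([
        system.narrow_procedural_task,
        system.improves_completed_human_activity,
        system.detects_patterns_without_replacing_review,
        system.preparatory_task_only,
    ])
    return not derogation_applies


# Hypothetical tool that only sorts incoming documents (a narrow procedural task).
print(is_high_risk(AnnexIIISystem(narrow_procedural_task=True)))  # False
# The same tool, but also profiling applicants: stays high-risk.
print(is_high_risk(AnnexIIISystem(narrow_procedural_task=True, performs_profiling=True)))  # True
```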

Minimal Risk

The AI Act imposes no specific obligations on AI systems classified as minimal or no risk. These make up the majority of AI applications currently used in the EU, such as AI-powered video games and spam filters. Providers and deployers may nonetheless voluntarily adopt codes of conduct.

General-Purpose AI Models (GPAI)

Understanding General-Purpose AI Models (GPAI) is crucial, as the EU AI Act introduces specific rules for GPAI models, particularly those with systemic risks. Companies must ensure compliance with the associated requirements.

What is GPAI?

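A GPAI model is an AI model that displays significant generality, is capable of competently performing a wide range of distinct tasks regardless of how it is placed on the market, and can be integrated into a variety of downstream systems or applications. Models used only for research, development, or prototyping before being placed on the market are excluded. All GPAI providers have baseline obligations, such as maintaining technical documentation and publishing a summary of training content. GPAI models with systemic risk face additional testing and reporting requirements.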

What is GPAI with Systemic Risks?

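GPAI models that could have significant, large-scale negative effects on society or the economy are classified as GPAI with Systemic Risks. A GPAI model falls into this category if it meets either of the following conditions:

(1) it has high-impact capabilities, evaluated using appropriate technical tools and methodologies, including indicators and benchmarks; or

(2) the European Commission decides that it has capabilities or an impact equivalent to those in (1).

A model is presumed to have high-impact capabilities when the cumulative compute used for its training exceeds 10^25 floating-point operations (FLOPs).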
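The compute-based presumption for systemic risk in Article 51(2) lends itself to a short check. The sketch below uses a hypothetical function name. It treats a model as a GPAI model with systemic risk in any of three cases:

- its training compute exceeds 10^25 FLOPs;
- it has otherwise been assessed as having high-impact capabilities;
- the Commission has designated it.

It deliberately omits the procedure by which a provider can argue against the classification.

```python
# Article 51(2) presumption threshold: more than 10^25 floating-point operations
# of cumulative training compute. Integers are used deliberately, because the
# float literal 1e25 is not exactly 10**25.
SYSTEMIC_RISK_FLOP_THRESHOLD = 10**25


def is_gpai_with_systemic_risk(
    training_flops: int,
    assessed_high_impact: bool = False,
    commission_designated: bool = False,
) -> bool:
    """Hypothetical check combining the two Article 51(1) conditions."""
    # Condition (1): high-impact capabilities, presumed above the compute threshold
    # or established through evaluation with technical tools and benchmarks.
    high_impact = training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD or assessed_high_impact
    # Condition (2): a Commission decision of equivalent capabilities or impact.
    return high_impact or commission_designated


print(is_gpai_with_systemic_risk(3 * 10**25))  # True
print(is_gpai_with_systemic_risk(10**24))  # False
```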

Key Requirements for GPAI Models with Systemic Risks

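- Model Evaluation: Evaluate the model using standardized protocols and tools that reflect the state of the art, including conducting and documenting adversarial testing.

- Risk Assessment: Assess and mitigate possible systemic risks at Union level.

- Cybersecurity Protection: Ensure an adequate level of cybersecurity protection.

- Codes of Practice: Providers may rely on codes of practice to demonstrate compliance until harmonized standards are published.

- Confidentiality Obligations: Information and documentation obtained under these provisions, including trade secrets, must be treated in accordance with confidentiality obligations.

- Incident Reporting: Track, document, and report serious incidents and possible corrective measures to the AI Office (and, as appropriate, national authorities) without undue delay.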

Refer to: EU AI Act Chapter V

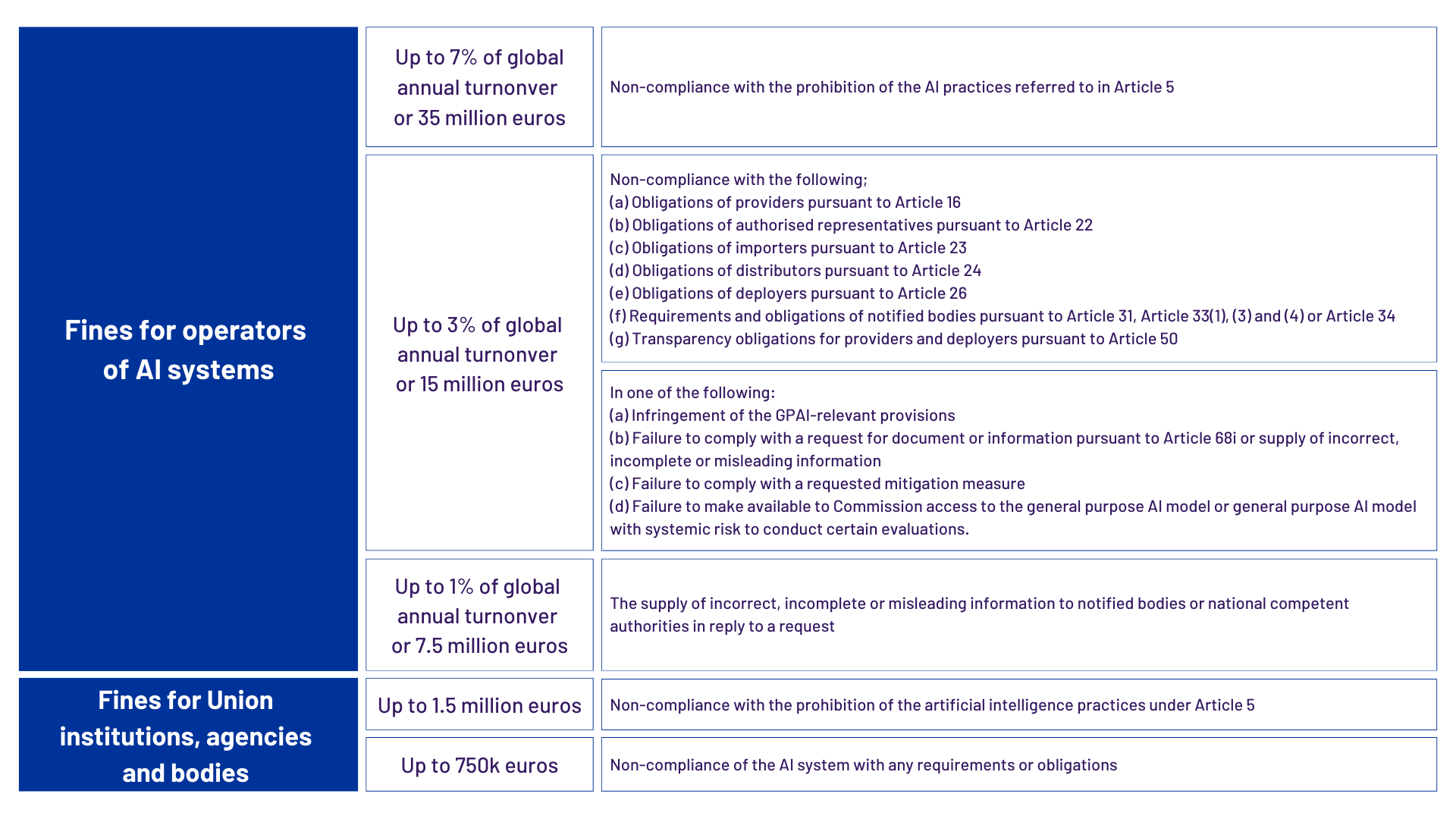

Penalties

The penalty provisions apply from August 2, 2025. The exception is fines on GPAI model providers under Article 101, which apply from August 2, 2026. Below is a general overview of maximum fines by type of violation and entity type.

- Prohibited AI Violation: Up to 7% of global annual turnover or 35 million euros

- Misleading Information to Authority: Up to 1% of global annual turnover or 7.5 million euros

- Other Violations: Up to 3% of global annual turnover or 15 million euros

For small and medium-sized enterprises (SMEs), including startups, the AI Act takes a proportionate approach that accounts for their interests and economic viability when setting fines. Larger companies face fines of up to the fixed amount or the percentage of global annual turnover, whichever is higher (for example, up to €35 million or 7%). For SMEs and startups, the cap is whichever of the two is lower.
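The cap logic above reduces to a comparison between a fixed amount and a share of worldwide annual turnover. Most companies face the higher of the two; SMEs and startups face the lower. Below is a minimal Python sketch of that calculation. The `Violation` enum and `max_fine_eur` function are hypothetical names, and the result is only the statutory maximum. Actual fines are set case by case, taking into account factors such as the nature, gravity, and duration of the infringement.

```python
from decimal import Decimal
from enum import Enum


class Violation(Enum):
    """Hypothetical labels; values are (fixed cap in EUR, share of turnover) from Article 99."""
    PROHIBITED_PRACTICE = (35_000_000, Decimal("0.07"))
    OTHER_OBLIGATION = (15_000_000, Decimal("0.03"))
    MISLEADING_INFORMATION = (7_500_000, Decimal("0.01"))


def max_fine_eur(violation: Violation, worldwide_turnover_eur: int, is_sme: bool = False) -> Decimal:
    """Statutory ceiling for a fine, not the fine an authority would actually impose."""
    cap_amount, share = violation.value
    fixed_cap = Decimal(cap_amount)
    # Turnover-based cap uses total worldwide annual turnover of the preceding financial year.
    turnover_cap = share * Decimal(worldwide_turnover_eur)
    # Larger companies: whichever is higher. SMEs and startups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 1_000_000_000))  # 70000000.00
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 100_000_000))  # 35000000
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 100_000_000, is_sme=True))  # 7000000.00
```

`Decimal` is used instead of `float` so that percentage calculations on large turnover figures stay exact.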

Refer to: EU AI Act Article 99

References

- WilmerHale

- EU AI Act

- KPMG, Decoding the EU AI Act

- IAPP EU AI Act 101 Overview

- The EU Artificial Intelligence Act

- European Commission: Shaping Europe’s digital future

- Forvis Mazars
